The production environment is the source of truth for how our function behaves. Netlify helps a lot here because it can deploy branches into the production environment, which means that when we open a PR against our serverless project, it can be deployed and we can run integration tests or review it manually before merging.

This is an early form of a "testing in production" workflow, and because it matches the pull-request workflows most teams already use, it is very powerful.

Combining it with feature flags that enable or disable code paths, along with other mechanisms, can lead to faster and safer deploys.
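As a minimal sketch of the idea, assume a flag stored in an environment variable. The variable name POKEMON_API_NEW_RESPONSE and the "Boop v2" body are hypothetical, not part of our project. Keeping the flag parsing in a pure function means we can test both code paths without touching the real environment.

```rust
use std::env;

/// Hypothetical flag name, used only for this sketch.
const NEW_RESPONSE_FLAG: &str = "POKEMON_API_NEW_RESPONSE";

/// Interpret a raw flag value: "1", "true", or "on" (any case, surrounding
/// whitespace ignored) enables it; anything else, including a missing
/// variable, leaves it disabled.
fn flag_enabled(raw: Option<&str>) -> bool {
    matches!(
        raw.map(|v| v.trim().to_ascii_lowercase()).as_deref(),
        Some("1") | Some("true") | Some("on")
    )
}

/// Pick the response body based on the flag.
fn response_body(new_response: bool) -> &'static str {
    if new_response {
        "Boop v2" // hypothetical new code path, only reachable when the flag is on
    } else {
        "Boop" // existing behavior stays the default
    }
}

/// Read the flag from the process environment at request time.
fn current_body() -> &'static str {
    let raw = env::var(NEW_RESPONSE_FLAG).ok();
    response_body(flag_enabled(raw.as_deref()))
}
```

Defaulting to the existing behavior when the variable is missing or malformed keeps a misconfigured deploy on the known-good path.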

Before we deploy, Rust gives us a few tools we use every time we compile, and they give us confidence that our code behaves as we intend. Type checking with cargo check, linting with cargo clippy, benchmarking with cargo bench, and the final compilation itself all contribute to how much confidence we can have in our deployments.

We have one more tool we can set up and use: cargo test.

Cargo comes with built-in support for approaches like unit and integration testing.

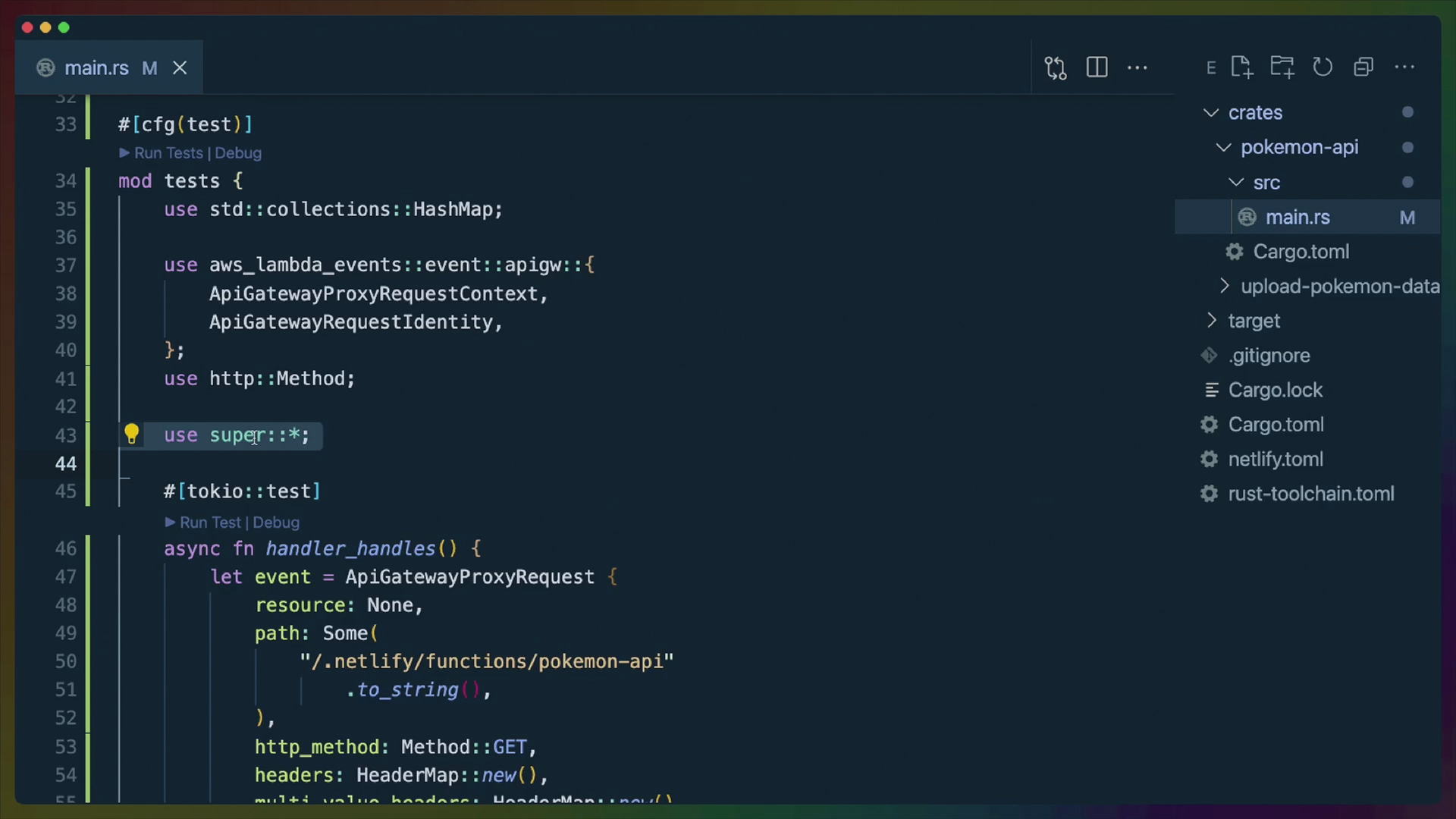
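Unit tests conventionally live next to the code in a #[cfg(test)] module, while integration tests go in a separate tests/ directory at the crate root and exercise the crate's public API. Here is a minimal sketch of the unit-test side, using a hypothetical status_text function that is not part of our project:

```rust
/// Hypothetical helper used only to illustrate a unit test.
pub fn status_text(code: u16) -> &'static str {
    match code {
        200 => "OK",
        404 => "Not Found",
        500 => "Internal Server Error",
        _ => "Unknown",
    }
}

// Compiled only by `cargo test`; unit tests can also reach private items.
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn known_codes_have_text() {
        assert_eq!(status_text(200), "OK");
        assert_eq!(status_text(404), "Not Found");
    }

    #[test]
    fn unknown_codes_fall_back() {
        assert_eq!(status_text(418), "Unknown");
    }
}
```

For a library crate, an integration test would be a file such as tests/status.rs that imports status_text through the crate's name; cargo test discovers and runs those files automatically.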

We can write a unit test for our serverless function that will allow us to build confidence that it handles event objects properly and gives us the return values we expect.

This will let us run our handler code locally, in CI, or elsewhere, simulating one event being processed.

```rust
// Compiled only when running `cargo test`.
#[cfg(test)]
mod tests {
    use std::collections::HashMap;

    use aws_lambda_events::event::apigw::{
        ApiGatewayProxyRequestContext,
        ApiGatewayRequestIdentity,
    };
    use http::Method;

    // Brings handler and the types imported in the parent module into scope.
    use super::*;

    #[tokio::test]
    async fn handler_handles() {
        // Only path and http_method matter here; everything else is empty.
        let event = ApiGatewayProxyRequest {
            resource: None,
            path: Some("/.netlify/functions/pokemon-api".to_string()),
            http_method: Method::GET,
            headers: HeaderMap::new(),
            multi_value_headers: HeaderMap::new(),
            query_string_parameters: HashMap::new(),
            multi_value_query_string_parameters: HashMap::new(),
            path_parameters: HashMap::new(),
            stage_variables: HashMap::new(),
            request_context: ApiGatewayProxyRequestContext {
                account_id: None,
                resource_id: None,
                operation_name: None,
                stage: None,
                domain_name: None,
                domain_prefix: None,
                request_id: None,
                protocol: None,
                identity: ApiGatewayRequestIdentity {
                    cognito_identity_pool_id: None,
                    account_id: None,
                    cognito_identity_id: None,
                    caller: None,
                    api_key: None,
                    api_key_id: None,
                    access_key: None,
                    source_ip: None,
                    cognito_authentication_type: None,
                    cognito_authentication_provider: None,
                    user_arn: None,
                    user_agent: None,
                    user: None,
                },
                resource_path: None,
                authorizer: HashMap::new(),
                http_method: Method::GET,
                request_time: None,
                request_time_epoch: 0,
                apiid: None,
            },
            body: None,
            is_base64_encoded: Some(false),
        };

        assert_eq!(
            handler(event.clone(), Context::default())
                .await
                .unwrap(),
            ApiGatewayProxyResponse {
                status_code: 200,
                headers: HeaderMap::new(),
                multi_value_headers: HeaderMap::new(),
                body: Some(Body::Text("Boop".to_string())),
                is_base64_encoded: Some(false),
            }
        );
    }
}
```

The #[cfg] attribute lets us conditionally include source code in our compilation. Here, our test module is only compiled when the test configuration option is enabled, which happens when the compiler builds in "test mode". cargo test turns that mode on for us.

There are quite a few conditions we can use in a cfg attribute, such as target operating systems, features, or architectures.
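A small sketch of a few of those predicates, using hypothetical os_label and arch_label functions. The attribute form removes items from compilation entirely, while the cfg! macro evaluates the same predicates to a plain true or false.

```rust
// Exactly one of these three definitions is compiled, chosen by target OS.
#[cfg(target_os = "linux")]
fn os_label() -> &'static str {
    "linux"
}

#[cfg(target_os = "macos")]
fn os_label() -> &'static str {
    "macos"
}

#[cfg(not(any(target_os = "linux", target_os = "macos")))]
fn os_label() -> &'static str {
    "other"
}

// cfg! becomes a compile-time bool, so every branch is still compiled
// and must type-check on every target.
fn arch_label() -> &'static str {
    if cfg!(target_arch = "x86_64") {
        "x86_64"
    } else if cfg!(target_arch = "aarch64") {
        "aarch64"
    } else {
        "other"
    }
}

// Feature-gated code uses the same pattern, e.g. #[cfg(feature = "metrics")],
// where "metrics" would be a feature declared in Cargo.toml (hypothetical here).
```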

In this case we're conditionally including a submodule, which we've called tests by convention. The name of this submodule doesn't matter.

Rust modules don't necessarily mirror the file system, and we can see that here: tests is a submodule defined entirely inside main.rs.

Because tests is a submodule, we can bring additional items into scope that only our tests use. Here, all of these items are used to construct a fake request.

```rust
use std::collections::HashMap;

use aws_lambda_events::event::apigw::{
    ApiGatewayProxyRequestContext,
    ApiGatewayRequestIdentity,
};
use http::Method;
```

It's incredibly useful for unit tests to be able to access any item defined in the parent module, so we use super::* to bring all of the parent's items, such as handler, into scope.
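A minimal, std-only sketch of why super::* works here: Rust's privacy rules let a child module use its ancestors' private items, so a tests module can call functions even when they are not marked pub. The names double and checks are hypothetical.

```rust
// A private function in the parent module (no `pub`).
fn double(x: i32) -> i32 {
    x * 2
}

// An inline child module, defined in the same file as its parent.
mod checks {
    // Glob-import everything from the parent, including private items,
    // because child modules can see their ancestors' private items.
    use super::*;

    pub fn double_answer() -> i32 {
        double(21)
    }
}
```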

handler is an async function, so we need an async runtime to run it, in our case tokio. While Rust offers the #[test] attribute to mark tests, the tokio crate provides its own async counterpart, #[tokio::test].

The #[test] attribute is essentially a flag telling the test runner "execute this function as a test", since test functions are otherwise no different from regular functions. #[tokio::test] additionally sets up a tokio runtime for us. If we want, we can control which kind of runtime tokio uses in our tests, including single-threaded, multi-threaded, or even starting with time paused.
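To make "sets up a runtime" concrete, here is a rough, std-only sketch of what an async test attribute has to do: wrap the async body in an ordinary function that drives the future to completion. This is not tokio's actual expansion, which builds a tokio runtime instead of the tiny block_on executor below, and handler_stub is a hypothetical stand-in for our handler. With tokio itself, #[tokio::test] defaults to a single-threaded (current_thread) runtime, and the runtime is chosen through attribute arguments such as #[tokio::test(flavor = "multi_thread", worker_threads = 2)], or #[tokio::test(start_paused = true)] for paused time, which needs tokio's test-util feature.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
// Note: this is std's task Context, unrelated to the Lambda Context.
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

// Waker that unparks the thread blocked in `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Minimal single-threaded executor: poll the future, park the thread
/// while it is pending, and return its output once it is ready.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker: Waker = Arc::new(ThreadWaker(thread::current())).into();
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            Poll::Pending => thread::park(),
        }
    }
}

// Hypothetical stand-in for our async handler.
async fn handler_stub(path: &str) -> Result<u16, String> {
    if path.starts_with("/.netlify/functions/") {
        Ok(200)
    } else {
        Err(format!("unknown path: {path}"))
    }
}

// Roughly what an async test attribute generates: a plain, synchronous
// test function whose body runs the async code on a runtime.
#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn handler_handles() {
        let status = block_on(async {
            handler_stub("/.netlify/functions/pokemon-api").await.unwrap()
        });
        assert_eq!(status, 200);
    }
}
```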

Because handler is async, our test needs to be an async function as well. Test functions accept no arguments and return either () (the default return type of every function) or a Result, similar to how main works.
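A minimal sketch of the Result-returning form, using a hypothetical parse_status helper. Returning a Result lets the test body use the ? operator; if it returns Err, the test fails.

```rust
use std::num::ParseIntError;

/// Hypothetical helper: parse a status code from a string such as "200".
fn parse_status(raw: &str) -> Result<u16, ParseIntError> {
    raw.trim().parse::<u16>()
}

#[cfg(test)]
mod tests {
    use super::*;

    // `?` propagates a parse error out of the test, which fails it
    // instead of requiring an explicit unwrap.
    #[test]
    fn parses_status() -> Result<(), ParseIntError> {
        let status = parse_status(" 200 ")?;
        assert_eq!(status, 200);
        Ok(())
    }
}
```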

Inside the test, we construct an event to stand in for the first argument to our handler. ApiGatewayProxyRequest does not implement the Default trait, so we have to construct the entire struct ourselves, which makes it a bit long. Every field except path and http_method is set to a reasonable empty value, such as None, 0, or an empty HashMap or HeaderMap.

```rust
let event = ApiGatewayProxyRequest {
    resource: None,
    path: Some("/.netlify/functions/pokemon-api".to_string()),
    http_method: Method::GET,
    headers: HeaderMap::new(),
    multi_value_headers: HeaderMap::new(),
    query_string_parameters: HashMap::new(),
    multi_value_query_string_parameters: HashMap::new(),
    path_parameters: HashMap::new(),
    stage_variables: HashMap::new(),
    request_context: ApiGatewayProxyRequestContext {
        account_id: None,
        resource_id: None,
        operation_name: None,
        stage: None,
        domain_name: None,
        domain_prefix: None,
        request_id: None,
        protocol: None,
        identity: ApiGatewayRequestIdentity {
            cognito_identity_pool_id: None,
            account_id: None,
            cognito_identity_id: None,
            caller: None,
            api_key: None,
            api_key_id: None,
            access_key: None,
            source_ip: None,
            cognito_authentication_type: None,
            cognito_authentication_provider: None,
            user_arn: None,
            user_agent: None,
            user: None,
        },
        resource_path: None,
        authorizer: HashMap::new(),
        http_method: Method::GET,
        request_time: None,
        request_time_epoch: 0,
        apiid: None,
    },
    body: None,
    is_base64_encoded: Some(false),
};
```

It will be useful to write some helper functions to construct fake request objects if we write more tests.
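A minimal sketch of such a helper. To keep it self-contained, it uses a hypothetical, trimmed-down FakeRequest in place of ApiGatewayProxyRequest, which has many more fields and uses http::Method rather than a String for the method.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for ApiGatewayProxyRequest with only a few fields.
#[derive(Debug, Clone, PartialEq)]
struct FakeRequest {
    path: Option<String>,
    http_method: String,
    query_string_parameters: HashMap<String, String>,
    body: Option<String>,
}

/// Build a request for `path` and `method`, filling every other field
/// with an empty value, as the test above does.
fn fake_request(path: &str, method: &str) -> FakeRequest {
    FakeRequest {
        path: Some(path.to_string()),
        http_method: method.to_string(),
        query_string_parameters: HashMap::new(),
        body: None,
    }
}

/// Builder-style helper for tests that need a query string parameter.
fn with_query(mut req: FakeRequest, key: &str, value: &str) -> FakeRequest {
    req.query_string_parameters
        .insert(key.to_string(), value.to_string());
    req
}
```

With the real type, the body of fake_request would be the full struct literal from our test, with path and http_method taken from the parameters, so each new test only states what makes it different.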

Rust provides a few useful macros for writing tests. One of these is assert_eq!, which we'll use to compare the handler's result against a value we construct.

```rust
assert_eq!(
    handler(event.clone(), Context::default())
        .await
        .unwrap(),
    ApiGatewayProxyResponse {
        status_code: 200,
        headers: HeaderMap::new(),
        multi_value_headers: HeaderMap::new(),
        body: Some(Body::Text("Boop".to_string())),
        is_base64_encoded: Some(false),
    }
);
```

The handler call accepts the event we created and a Context. Luckily for us, Context implements the Default trait, so we can use Context::default() to construct a valid value.
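As a sketch of what implementing Default buys us, here is a hypothetical FakeContext that derives it (its fields are illustrative only, not the real Context's). Struct update syntax (..Default::default()) then lets a test override just the fields it cares about, which is exactly the shortcut ApiGatewayProxyRequest doesn't offer.

```rust
use std::collections::HashMap;

// Hypothetical context type; field names are illustrative only.
#[derive(Debug, Default, Clone, PartialEq)]
struct FakeContext {
    request_id: String,
    deadline_ms: u64,
    env_config: HashMap<String, String>,
}

fn context_with_request_id(id: &str) -> FakeContext {
    FakeContext {
        request_id: id.to_string(),
        // Every remaining field takes its Default value: 0 and an empty map.
        ..Default::default()
    }
}
```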

We have to await the handler since it's an async function, and then we unwrap the Result it returns, which panics and fails the test if it is an Err.
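To see that failure mode in isolation, here is a std-only sketch with a hypothetical failing_handler. #[should_panic(expected = "...")] asserts that a test panics with a message containing the given text, and expect behaves like unwrap but leads the panic message with our own description, which makes failures easier to read.

```rust
/// Hypothetical handler that always fails, standing in for an Err result.
fn failing_handler() -> Result<u16, String> {
    Err("upstream timeout".to_string())
}

#[cfg(test)]
mod tests {
    use super::*;

    // unwrap() on an Err panics with a message that includes the error's
    // Debug output, so the expected substring matches.
    #[test]
    #[should_panic(expected = "upstream timeout")]
    fn unwrap_on_err_fails_the_test() {
        failing_handler().unwrap();
    }

    // expect() also panics on Err, but starts with our own message.
    #[test]
    #[should_panic(expected = "handler returned an error")]
    fn expect_adds_context() {
        failing_handler().expect("handler returned an error");
    }
}
```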

As the second argument to assert_eq!, we construct the API response we expect.
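Besides assert_eq!, the standard library provides assert_ne! and assert!, and all three accept an optional custom failure message. The sketch below uses a hypothetical Response type and respond function. Deriving Debug and PartialEq is what makes whole-struct comparison with assert_eq! possible, which is also why comparing against an entire ApiGatewayProxyResponse requires that type to implement both traits.

```rust
// assert_eq!/assert_ne! need both sides to implement PartialEq (to compare)
// and Debug (to print both values when the assertion fails).
#[derive(Debug, PartialEq)]
struct Response {
    status_code: i64,
    body: Option<String>,
}

/// Hypothetical function under test.
fn respond(name: &str) -> Response {
    Response {
        status_code: 200,
        body: Some(format!("Boop, {name}")),
    }
}

fn check_response() {
    let res = respond("pikachu");
    // Compare whole values, as our test does with ApiGatewayProxyResponse.
    assert_eq!(
        res,
        Response {
            status_code: 200,
            body: Some("Boop, pikachu".to_string()),
        }
    );
    // Assert that two values differ.
    assert_ne!(res.status_code, 500);
    // assert! takes any bool, plus an optional format-string message.
    assert!(res.body.is_some(), "expected a body for {}", "pikachu");
}
```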

Then running cargo test will find this test and run it for us.

```shell
cargo test -p pokemon-api
```

The output will look something like this:

```text
running 1 test
test tests::handler_handles ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s
```