Testing non-interactive CLI tools is pretty straightforward: run the CLI and check the output.

Testing CLI tools that require user input is a bit harder, and we’ll need a few dependencies. One of them also doesn’t work cross-platform, so beware if you’re on Windows for this lesson: the rexpect crate will not compile unless you’re on WSL or a *nix VM.

```shell
❯ cargo add rexpect predicates assert_fs --dev
    Updating crates.io index
      Adding rexpect v0.5.0 to dev-dependencies.
      Adding predicates v3.0.3 to dev-dependencies.
             Features:
             + color
             + diff
             + float-cmp
             + normalize-line-endings
             + regex
             - unstable
      Adding assert_fs v1.0.13 to dev-dependencies.
             Features:
             - color
             - color-auto
    Updating crates.io index
```

rexpect is a crate that allows us to assert against the output of an interactive CLI, and send input back to it.

assert_fs allows us to test a directory for the creation of different files and their contents. It also allows us to create temporary directories for our tests, which is really important for not cluttering our codebase with generated test files.

predicates provides ready-made test functions (predicates) that we can use to check for the existence of files and other properties.

Our Cargo.toml has a few additional dev dependencies now.

```toml
[package]
name = "garden"
version = "0.1.0"
edition = "2021"

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

[dependencies]
clap = { version = "4.3.19", features = ["env", "derive"] }
directories = "5.0.1"
edit = "0.1.4"
miette = { version = "5.10.0", features = ["fancy"] }
owo-colors = "3.5.0"
rprompt = "2.0.2"
slug = "0.1.4"
tempfile = "3.7.0"
thiserror = "1.0.44"

[dev-dependencies]
assert_cmd = "2.0.12"
assert_fs = "1.0.13"
predicates = "3.0.3"
rexpect = "0.5.0"
```

Since rexpect would break compilation on Windows anyway, we can make sure it is never built there by moving it into a target-specific table, [target.'cfg(not(windows))'.dev-dependencies].

```toml
[dev-dependencies]
assert_cmd = "2.0.12"
assert_fs = "1.0.13"
predicates = "3.0.3"

[target.'cfg(not(windows))'.dev-dependencies]
rexpect = "0.5.0"
```

We’ll bring in the assert_fs and predicates preludes. By convention, a prelude is a module that re-exports many of the items you’d typically use from a crate. This makes it easier to get started with a particular crate, and you can always trim down the imports later if you want.

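We also use rexpect's spawn_command, the Rust standard library’s Command, and the standard Error trait, which our test’s return type refers to.

```rust
use assert_fs::prelude::*;
use predicates::prelude::*;
use rexpect::session::spawn_command;
use std::error::Error;
use std::process::Command;
```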

And here’s the full test we’ll write with them.

```rust
#[test]
fn test_write_with_title() -> Result<(), Box<dyn Error>> {
    let temp_dir = assert_fs::TempDir::new()?;
    let bin_path = assert_cmd::cargo::cargo_bin("garden");
    let fake_editor_path = std::env::current_dir()?
        .join("tests")
        .join("fake-editor.sh");
    if !fake_editor_path.exists() {
        panic!("fake editor shell script could not be found")
    }

    let mut cmd = Command::new(bin_path);
    cmd.env("EDITOR", fake_editor_path.into_os_string())
        .env("GARDEN_PATH", temp_dir.path())
        .arg("write")
        .arg("-t")
        .arg("atitle");

    let mut process = spawn_command(cmd, Some(30000))?;

    process.exp_string("current title: ")?;
    process.exp_string("atitle")?;
    process.exp_regex("\\s*")?;
    process.exp_string("Do you want a different title? (y/N): ")?;
    process.send_line("N")?;
    process.exp_eof()?;

    temp_dir
        .child("atitle.md")
        .assert(predicate::path::exists());

    Ok(())
}
```

Box in tests

We start off by writing a new test function with the #[test] attribute.

Test functions are allowed to return Results, and errors will fail the test just like panics will.

Using a Result in a test signature is useful because it allows us to clean up code that would otherwise have .unwrap() in a lot of places. We get to use ? instead, which is much more concise and out of the way, allowing us to focus on our test code.

```rust
#[test]
fn test_write_with_title() -> Result<(), Box<dyn Error>> {
    // ...
}
```

The other big feature in this signature is Box<dyn Error>, which makes use of two Rust idioms: boxing values and trait objects.

The TL;DR is that this type signature says “you can use any type that implements the Error trait here”, at the cost of not being able to tell at compile time which error types and values will be used.

It’s effectively dynamic typing for errors.

In our library code, we’d prefer to know which errors can occur at compile time, but in tests it doesn’t matter because they all get printed out when the test fails and nothing else is done with them.

We don’t need to match on the error returned from a failing test.

You can read more about the technical details in the Box and dyn docs.

Because the standard library provides a conversion from any type that implements Error into Box<dyn Error>, we can use ? on any of these errors without converting them by hand.
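As a small illustration, the sketch below uses ? on two different error types, std::num::ParseIntError and std::num::ParseFloatError, inside a single function that returns Box<dyn Error>. The function parse_whole_and_fraction is hypothetical and not part of garden; the #[test] alongside it shows the same pattern in a test signature.

```rust
use std::error::Error;

/// Hypothetical helper: parse an integer part and a fractional part.
/// Each `?` converts a different concrete error type into Box<dyn Error>.
fn parse_whole_and_fraction(whole: &str, fraction: &str) -> Result<f64, Box<dyn Error>> {
    let whole: i64 = whole.parse()?; // ParseIntError -> Box<dyn Error>
    let fraction: f64 = fraction.parse()?; // ParseFloatError -> Box<dyn Error>
    Ok(whole as f64 + fraction)
}

#[test]
fn parses_both_parts() -> Result<(), Box<dyn Error>> {
    // No .unwrap() needed: an Err here fails the test and prints the error.
    assert_eq!(parse_whole_and_fraction("2", "0.5")?, 2.5);
    Ok(())
}
```

Calling it with "x" as the whole part returns an error whose message is the ParseIntError’s own text, "invalid digit found in string".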

Temporary directories

Our CLI writes out files.

We have to pick a place to put those files when we run tests.

Ideally this place would be temporary and isolated to the test. It would be created when the test started and cleaned up when the test stopped.

If it isn’t cleaned up when the test stops, it should also live somewhere on the filesystem that the operating system cleans up periodically, so we don’t keep piling up junk on the filesystem.

This is exactly what TempDir from assert_fs gives us.

```rust
let temp_dir = assert_fs::TempDir::new()?;
```
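To make that behavior concrete, here’s a std-only sketch of the idea behind a temporary directory guard: create a uniquely named directory under the system temp directory (std::env::temp_dir) and delete it when the guard is dropped. ScratchDir is a hypothetical name and this is not assert_fs’s implementation; in real tests, use TempDir.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};
use std::process;
use std::sync::atomic::{AtomicUsize, Ordering};

// Counter so two guards created by the same process get different names.
static NEXT_ID: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical stand-in for assert_fs::TempDir (not its real implementation).
struct ScratchDir {
    path: PathBuf,
}

impl ScratchDir {
    /// Create a fresh, uniquely named directory under the system temp dir.
    fn new() -> io::Result<Self> {
        let id = NEXT_ID.fetch_add(1, Ordering::SeqCst);
        let path = std::env::temp_dir().join(format!("garden-test-{}-{}", process::id(), id));
        fs::create_dir_all(&path)?;
        Ok(Self { path })
    }

    fn path(&self) -> &Path {
        &self.path
    }

    /// Path of a file inside the directory (the file itself is not created).
    fn child(&self, name: &str) -> PathBuf {
        self.path.join(name)
    }
}

impl Drop for ScratchDir {
    fn drop(&mut self) {
        // Best-effort cleanup: Drop can't return an error, so failures are ignored.
        let _ = fs::remove_dir_all(&self.path);
    }
}
```

Tying cleanup to Drop means the directory is removed when the guard goes out of scope, including when a test fails with a panic and the stack unwinds.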

Getting the cargo binary path

We also need the path to our binary after it’s been compiled so we can launch it for the test.

assert_cmd has a function for that as well: cargo_bin.

```rust
let bin_path = assert_cmd::cargo::cargo_bin("garden");
```

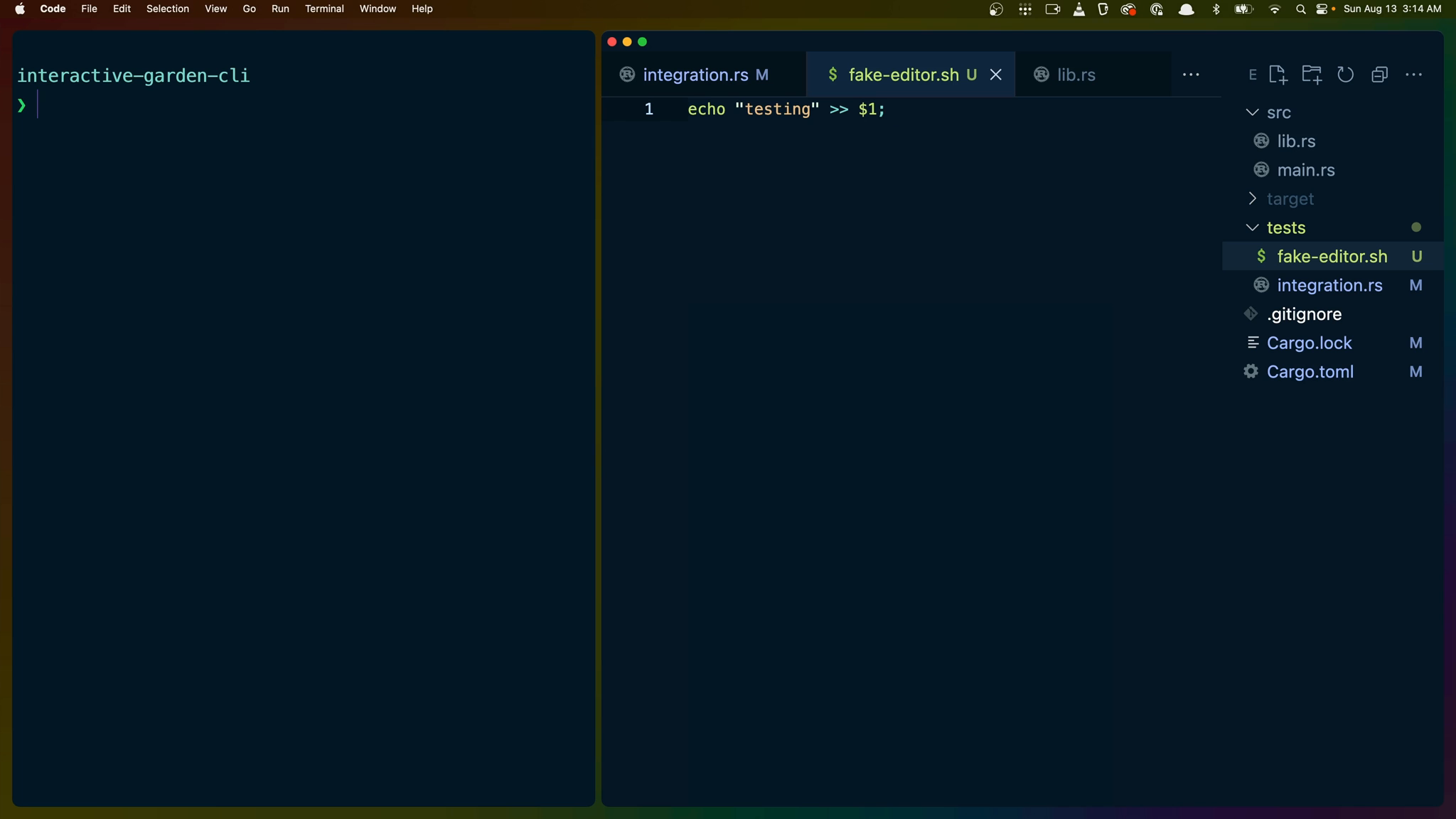

Faking the user’s editor

The garden CLI passes control to the user’s editor of choice before continuing…

How do we do that in a test?

An editor is a CLI program like any other, so we can create our own fake editor and have garden call it instead.

Luckily, our “editor” only needs to write the same string to a file every time we call it. The editor gets called with the path of the file it needs to modify, so we can write a bash script that echoes the string "testing" into the file it’s given.

>> in bash appends the output to a file, which means we’ll end up with # testing in our file, since it already contains some template content.

$1 is the first argument in bash, which in our case is the filename.

```bash
#!/usr/bin/env bash
echo "testing" >> "$1"
```

Put this “fake editor” in tests/fake-editor.sh.
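To see why a script like this works as an editor, here’s a std-only sketch of roughly what handing a file to an editor looks like: run the editor program with the file path as its only argument and wait for it to exit. run_editor is a hypothetical helper, not garden’s actual implementation.

```rust
use std::io;
use std::path::Path;
use std::process::Command;

/// Hypothetical helper (not garden's code): run `editor` on `file`
/// and wait for it to exit, the way a CLI hands a file to $EDITOR.
fn run_editor(editor: &Path, file: &Path) -> io::Result<()> {
    // The editor receives the file path as its first argument ($1 in our script).
    let status = Command::new(editor).arg(file).status()?;
    if status.success() {
        Ok(())
    } else {
        Err(io::Error::new(
            io::ErrorKind::Other,
            format!("editor exited with {status}"),
        ))
    }
}
```

Because the fake editor just appends and exits, calling it this way on a file containing # leaves # testing followed by a newline in the file, with no user interaction required.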

Getting a filepath in tests

We still need to find the path to our fake editor to be able to use it. current_dir returns the current working directory, which, when cargo runs our integration tests, is the root of our project.

We then join tests and fake-editor.sh onto the end of that PathBuf.

PathBuf provides the exists method (through Path), which lets us quickly check that the fake editor script actually exists at this point. If it doesn’t, we’ve messed something up and should panic.

```rust
let fake_editor_path = std::env::current_dir()?
    .join("tests")
    .join("fake-editor.sh");
if !fake_editor_path.exists() {
    panic!("fake editor shell script could not be found")
}
```
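Since the test already returns Result<(), Box<dyn Error>>, the existence check could also return an error instead of panicking. Here’s a sketch with a hypothetical test_fixture helper that takes the project root as a parameter; in an integration test you could pass std::env::current_dir()? as above, or Path::new(env!("CARGO_MANIFEST_DIR")), which Cargo sets at compile time and which doesn’t depend on the working directory.

```rust
use std::error::Error;
use std::path::{Path, PathBuf};

/// Hypothetical helper: locate `<root>/tests/<name>`, returning an error
/// (instead of panicking) if the file is missing.
fn test_fixture(root: &Path, name: &str) -> Result<PathBuf, Box<dyn Error>> {
    let path = root.join("tests").join(name);
    if !path.exists() {
        // String converts into Box<dyn Error>, so `.into()` is enough here.
        return Err(format!("fixture {} could not be found", path.display()).into());
    }
    Ok(path)
}
```

Usage inside the test would then be a single line, for example let fake_editor_path = test_fixture(&std::env::current_dir()?, "fake-editor.sh")?;, and a missing script fails the test with the full path in the message.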

Bootstrapping our binary

Our binary needs a certain set of environment variables and arguments to run.

std::process::Command lets us create a new command with our binary path, which we can then add environment variables and arguments to.

We put our fake editor in place by setting the EDITOR variable to the path of the fake editor script.

The GARDEN_PATH is the temporary directory we created earlier.

The arguments can be given one by one, resulting in write -t atitle being passed to our binary.

```rust
let mut cmd = Command::new(bin_path);
cmd.env("EDITOR", fake_editor_path.into_os_string())
    .env("GARDEN_PATH", temp_dir.path())
    .arg("write")
    .arg("-t")
    .arg("atitle");
```
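If you want to double-check what will be passed to the binary without running it, std::process::Command can be inspected with get_program, get_args, and get_envs. The sketch below builds the same command using .args with an array, which is equivalent to the three .arg calls; build_write_command and args_of are hypothetical helpers.

```rust
use std::path::Path;
use std::process::Command;

/// Hypothetical helper that builds the same command as the test above.
fn build_write_command(bin: &Path, editor: &Path, garden_path: &Path) -> Command {
    let mut cmd = Command::new(bin);
    cmd.env("EDITOR", editor)
        .env("GARDEN_PATH", garden_path)
        // Equivalent to .arg("write").arg("-t").arg("atitle")
        .args(["write", "-t", "atitle"]);
    cmd
}

/// Collect the arguments as (lossily converted) UTF-8 strings for easy comparison.
fn args_of(cmd: &Command) -> Vec<String> {
    cmd.get_args()
        .map(|arg| arg.to_string_lossy().into_owned())
        .collect()
}
```

Nothing is spawned until something like status, output, or rexpect’s spawn_command consumes the Command, so inspecting it this way is cheap.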

Spawning the process and interacting with it

We have the command we want to run, and we can start running it using rexpect. The spawn_command function (see spawn for the documentation) accepts the command we just bootstrapped and a timeout in milliseconds, which we’ve set to Some(30000), or 30 seconds.

We should never hit this timeout, but if any of our exp functions takes longer than 30 seconds, our test will fail instead of hanging forever.

With our process spawned, we can expect that certain strings or regex patterns appear in its output.

Each expectation “eats” the output, so we move forward through the output each time we call one of the exp functions.

The string expectations here are straightforward: they literally match the strings shown.

The regex here matches “any amount of whitespace” to account for the newlines in our output.

After checking that the output is what we expect, we can interact with the process using send_line, which we use to send N back to the program.

Finally, we use exp_eof to expect that the program closes its output, which means it has finished running.

```rust
let mut process = spawn_command(cmd, Some(30000))?;

process.exp_string("current title: ")?;
process.exp_string("atitle")?;
process.exp_regex("\\s*")?;
process.exp_string("Do you want a different title? (y/N): ")?;
process.send_line("N")?;
process.exp_eof()?;
```
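To make the “eating” behavior concrete, here is a toy model in plain Rust. OutputCursor is hypothetical and is not rexpect’s implementation (rexpect reads from a pseudo-terminal and keeps waiting for more output until the timeout); it only shows how each successful expectation moves a cursor forward, so output that has already been matched can’t be matched again.

```rust
/// Toy model (not rexpect's implementation) of how successive expectations
/// consume a process's output.
struct OutputCursor {
    output: String,
    pos: usize,
}

impl OutputCursor {
    fn new(output: &str) -> Self {
        Self { output: output.to_string(), pos: 0 }
    }

    /// Search only the unread part of the output. On success, return the text
    /// before the match and move the cursor past it; on failure, don't move.
    fn exp_string(&mut self, needle: &str) -> Result<String, String> {
        let unread = &self.output[self.pos..];
        match unread.find(needle) {
            Some(idx) => {
                let before = unread[..idx].to_string();
                self.pos += idx + needle.len();
                Ok(before)
            }
            None => Err(format!("expected {needle:?} in unread output {unread:?}")),
        }
    }
}
```

Feeding it the prompt from our test, "current title: " and then "atitle" both match, but asking for "current title: " a second time fails because that part of the output has already been consumed.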

Testing the output

Assuming our CLI ran successfully, we still need to test its output, which should be a file in the garden.

TempDir implements the PathChild trait, which gives us the [child](https://docs.rs/assert_fs/1.0.13/assert_fs/fixture/trait.PathChild.html#tymethod.child) method.

We can use it to get the path of the file we expect to exist in the temporary directory.

After getting the path to the child, we also need to assert something about the file. In this case we use the predicates crate to make sure the file exists.

We don’t really see the power of the predicates crate here, so for now you can think of it as “a collection of functions for common assertions”.

```rust
temp_dir
    .child("atitle.md")
    .assert(predicate::path::exists());
```

Ok

Finally, if all goes well, we return Ok(()) to pass the test, since we declared the test function’s return type as a Result.

Cargo test

Running cargo test now runs the test, but fails.

```shell
❯ cargo test
    Finished test [unoptimized + debuginfo] target(s) in 0.04s
     Running unittests src/lib.rs (target/debug/deps/garden-fbdefd90ecb77d73)

running 3 tests
test tests::title_from_content_no_title ... ok
test tests::title_from_content_string ... ok
test tests::title_from_empty_string ... ok

test result: ok. 3 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running unittests src/main.rs (target/debug/deps/garden-d14d20b1389ed959)

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running tests/integration.rs (target/debug/deps/integration-b19d67cc51afbb48)

running 3 tests
test test_write_help ... ok
test test_help ... ok
test test_write_with_title ... FAILED

failures:

---- test_write_with_title stdout ----
Error: EOF { expected: "\"current title: \"", got: "Error: \u{1b}[31mgarden::io_error\u{1b}[0m\r\n\r\n \u{1b}[31mÃ\u{97}\u{1b}[0m garden::write\r\n\u{1b}[31m â\u{95}°â\u{94}\u{80}â\u{96}¶ \u{1b}[0mPermission denied (os error 13)\r\n\r\n", exit_code: None }

failures:
    test_write_with_title

test result: FAILED. 2 passed; 1 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.11s

error: test failed, to rerun pass `--test integration`
```

There are two issues here.

You may be able to read through the junk in this output and find the actual reason our test failed, but let’s fix the junk first.

We’ve immediately run into the issue that we included colors in our application!

Colors are printed as codes in the terminal, so we need to be able to conditionally turn the colors off for tests.
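Those codes are ANSI escape sequences: in the error above, \u{1b}[31m switches the text to red and \u{1b}[0m resets the style. Purely to show what they look like, here’s a sketch that strips simple SGR sequences (ESC [ … m) in plain Rust. strip_sgr is hypothetical, handles only that one sequence form, and turning colors off at the source is the more robust fix.

```rust
/// Hypothetical helper: remove ANSI SGR sequences of the form ESC '[' ... 'm'.
/// Other escape sequences are not handled.
fn strip_sgr(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    let mut chars = input.chars().peekable();
    while let Some(c) = chars.next() {
        if c == '\u{1b}' && chars.peek() == Some(&'[') {
            chars.next(); // consume the '['
            // Skip parameter characters (digits and ';') up to and including 'm'.
            for p in chars.by_ref() {
                if p == 'm' {
                    break;
                }
            }
        } else {
            out.push(c);
        }
    }
    out
}
```

For example, strip_sgr("\u{1b}[31mgarden::io_error\u{1b}[0m") returns "garden::io_error".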

Disabling colors in tests

owo-colors supports turning coloring off through the supports-colors feature. Update Cargo.toml to look like this.

```toml
owo-colors = { version = "3.5.0", features = ["supports-colors"] }
```

owo-colors offers a method named if_supports_color that checks whether the stream we’re writing to supports colors, and only applies the styling if it does.

It’s more verbose than just calling .blue().bold() on our strings, but it gives us the ability to turn colors on and off in our output.

```rust
use owo_colors::{OwoColorize, Style};
use std::io;

fn ask_for_filename() -> io::Result<String> {
    rprompt::prompt_reply(
        "Enter filename\n> ".if_supports_color(
            owo_colors::Stream::Stdout,
            |text| text.style(Style::new().blue().bold()),
        ),
    )
}
```

And in confirm_filename:

```rust
let result = rprompt::prompt_reply(&format!(
    "current title: {}\nDo you want a different title? (y/{}): ",
    &raw_title.if_supports_color(
        owo_colors::Stream::Stdout,
        |text| text.style(Style::new().green().bold()),
    ),
    "N".if_supports_color(
        owo_colors::Stream::Stdout,
        |text| text.style(Style::new().bold()),
    ),
))?;
```

In our test, we can add another .env to our command setup: NO_COLOR. This will turn off colors for this execution of our command.

```rust
    .env("GARDEN_PATH", temp_dir.path())
    .env("NO_COLOR", "true")
    .arg("write")
```

The output is now easier to read without the colors:

```shell
❯ cargo test
    Finished test [unoptimized + debuginfo] target(s) in 0.16s
     Running unittests src/lib.rs (target/debug/deps/garden-32ef13859f30a58d)

running 3 tests
test tests::title_from_empty_string ... ok
test tests::title_from_content_string ... ok
test tests::title_from_content_no_title ... ok

test result: ok. 3 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running unittests src/main.rs (target/debug/deps/garden-3738e58180b4ff04)

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running tests/integration.rs (target/debug/deps/integration-0a0ea84a86a8f7b7)

running 3 tests
test test_help ... ok
test test_write_help ... ok
test test_write_with_title ... FAILED

failures:

---- test_write_with_title stdout ----
Error: EOF { expected: "\"current title: \"", got: "Error: garden::io_error\r\n\r\n Ã\u{97} garden::write\r\n â\u{95}°â\u{94}\u{80}â\u{96}¶ Permission denied (os error 13)\r\n\r\n", exit_code: None }

failures:
    test_write_with_title

test result: FAILED. 2 passed; 1 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.11s

error: test failed, to rerun pass `--test integration`
```

Permission Denied

The error is Permission denied (os error 13).

This is because we need to add executable permissions to our fake editor.

```shell
chmod +x tests/fake-editor.sh
```

And now our test is passing!

```shell
❯ cargo test
    Finished test [unoptimized + debuginfo] target(s) in 0.14s
     Running unittests src/lib.rs (target/debug/deps/garden-32ef13859f30a58d)

running 3 tests
test tests::title_from_empty_string ... ok
test tests::title_from_content_no_title ... ok
test tests::title_from_content_string ... ok

test result: ok. 3 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running unittests src/main.rs (target/debug/deps/garden-3738e58180b4ff04)

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

     Running tests/integration.rs (target/debug/deps/integration-0a0ea84a86a8f7b7)

running 3 tests
test test_write_help ... ok
test test_help ... ok
test test_write_with_title ... ok

test result: ok. 3 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.22s

   Doc-tests garden

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s
```
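Git records the executable bit, so committing the script after running chmod +x on it is usually enough. If you’d rather have the test setup repair the permissions itself, here’s a Unix-only sketch using std::os::unix::fs::PermissionsExt. ensure_executable is a hypothetical helper, and unlike chmod +x it ignores the umask and always adds execute permission for user, group, and others.

```rust
use std::fs;
use std::io;
use std::os::unix::fs::PermissionsExt;
use std::path::Path;

/// Hypothetical helper: make sure `script` has its execute bits set.
fn ensure_executable(script: &Path) -> io::Result<()> {
    let mut perms = fs::metadata(script)?.permissions();
    let mode = perms.mode();
    if mode & 0o111 != 0o111 {
        // Add execute for user, group, and others (0o111), keeping other bits.
        perms.set_mode(mode | 0o111);
        fs::set_permissions(script, perms)?;
    }
    Ok(())
}
```

Calling ensure_executable(&fake_editor_path)? right after the existence check would avoid the Permission denied (os error 13) failure on a fresh checkout where the bit was lost.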

Windows

Finally, we don’t want this test to run on Windows, and we don’t want to use rexpect on Windows either, since it will fail to compile there.

We can use the cfg attribute to check whether the target OS is Windows, and invert the check with not.

cfg only includes the item it’s attached to if its condition is true, so both the test and the use statement are only compiled when the target platform is not Windows.

```rust
#[cfg(not(target_os = "windows"))]
use rexpect::session::spawn_command;

#[cfg(not(target_os = "windows"))]
#[test]
fn test_write_with_title() -> Result<(), Box<dyn Error>> {
    // ...
}
```
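The #[cfg(...)] attribute removes the item from compilation entirely, which is what we need here: an attribute like #[cfg_attr(windows, ignore)] would only mark the test as ignored, and the file would still fail to compile on Windows because rexpect isn’t a dependency there. When you just need a boolean, the cfg! macro evaluates the same condition at compile time. The sketch below contrasts the two; platform_note and runs_interactive_tests are hypothetical.

```rust
// Only one of these two functions exists in any given build.
#[cfg(not(target_os = "windows"))]
fn platform_note() -> &'static str {
    "interactive tests enabled"
}

#[cfg(target_os = "windows")]
fn platform_note() -> &'static str {
    "interactive tests skipped"
}

/// cfg! expands to a plain `true` or `false`, so any code that uses it
/// must still compile on every platform.
fn runs_interactive_tests() -> bool {
    !cfg!(target_os = "windows")
}
```

That compile-everywhere requirement is why cfg!, unlike #[cfg], can’t guard code that refers to rexpect.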